A CNN anchor gestured with her right hand, moving it away from the center of her body to the left, as she gave updates from the Centers for Disease Control and Prevention during the 2014 Ebola crisis.

UCLA statistics and communication studies professors are working to annotate thousands of hours of news clips by labeling distinct body language gestures, said Francis Steen, an associate professor of communication studies.

Steen added that the CNN anchor gestured away from herself to emphasize a contrast, communicating that the CDC’s view was controversial, even though the words she used seemed neutral.

Researchers are using the UCLA Library Broadcast NewsScape video archive to break down and analyze social and political news clips, said Song-Chun Zhu, the project’s principal investigator and a professor of statistics and computer science. NewsScape is an archive of digitized broadcast and cable television news programs that date back to 2005, Steen said.

A $1.8 million grant from the National Science Foundation funds the program.

Researchers and students working on the project aim to separate video clips into categories and make them searchable by keyword, said Weixin Li, a doctoral student in computer science who works on the project.

Steen said the NewsScape project’s core goal is to develop a better understanding of human communication and to facilitate collaboration among social and political scientists, statisticians, linguists and computer scientists.

Annotations are added to a database, and computers use the data to automatically parse and label parts of the videos.

“By programming computers to watch the news for us, we can have them look for things we’re interested in – certain words, faces, objects, gestures or topics – and discover the patterns of persuasion that you likely wouldn’t notice just by sitting down and watching the news,” he said.

Steen said it has been difficult to determine how the coverage of an event or a politician changes public opinion, because effective communication in the media combines written and spoken language with moving images.

Tim Groeling, chair and professor of communication studies, said he thinks an older archive of news programs that have not been digitized is at risk of being lost. As he continues to digitize this older content, thousands of hours of video dating back to the 1970s will also become available for the NewsScape project.

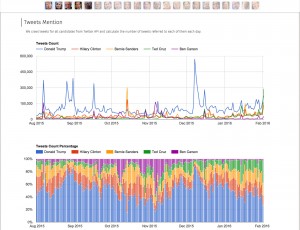

Zhu said researchers used NewsScape data to launch Viz 2016, a website that focuses on the 2016 U.S. presidential election, to better analyze and understand social and political news.

“We use software tools to label certain images with words or phrases and categorize them by topic,” he said.

Viz 2016 will analyze how candidates talk about different issues and how several major networks report on each candidate, he said. The site is automatically updated every week.

“The way an image is presented, as well as the body language of the person on-screen, can often have a lot to do with hidden bias expressed through things like gestures or tone of voice,” Zhu said.

The Viz 2016 site also looks for differences between how events were covered in the traditional media and how social media responded to that coverage, he added.

For example, the site tracks the tone of tweets about particular candidates over time, using a scale from negative to positive. It also tracks the total number of times candidates are mentioned in tweets, as well as how often candidates are mentioned on major news networks.

“Our work will make it easier to conduct searches, find patterns and analyze how the news is covered,” Zhu said.

Zhu said researchers plan to analyze previous elections and draw comparisons to the current election as the digital library of archived footage grows.

“Our memories as voters can be short, and we often don’t realize how coverage of past elections by the media influences our perceptions of candidates and issues,” he said. “(We don’t realize) how little our perceptions change over time.”